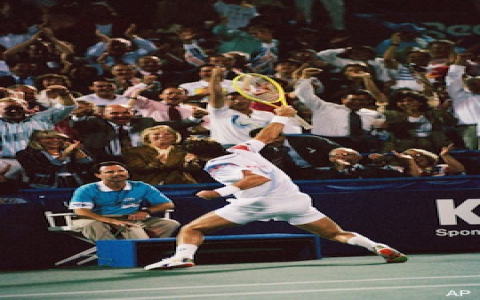

Okay, so yesterday I was messing around, trying to figure out how to get some data from this old Jimmy Connors tennis site. You know, just for kicks. I remember watching him play when I was a kid. Total legend.

First thing I did, fired up my browser and went to the site. Right-clicked and went to “Inspect”. Looked at the HTML source. What a mess! Tables everywhere. Figured I could probably scrape it, but thought, nah, let’s see if there’s an easier way.

Next, I started poking around for an API. No luck. Figured as much. This site looks like it hasn’t been updated since, like, 1998. But hey, gotta try, right?

So, back to the drawing board. I thought about using Python with Beautiful Soup to parse the HTML. That’s usually my go-to. But then I remembered hearing about this tool called “jq” for command-line JSON processing. I was thinking, maybe I could use `curl` to grab the HTML, convert it to JSON somehow, and then use `jq` to extract the data I wanted. Sounded kinda crazy, but worth a shot.

First, I installed `jq`. On my Mac, it was just a `brew install jq`. Easy peasy.

Then, I tried a simple `curl` command to grab the HTML of one of the player pages. Got a bunch of HTML back, as expected. Now, how to turn this into JSON?

That’s where things got tricky. I found this online tool that claimed to convert HTML to JSON. I pasted the HTML in, and it gave me this massive JSON object. It was…ugly. Like, REALLY ugly. But, technically, it was JSON.

Okay, so I saved the `curl` output, ran it through this online converter, downloaded the JSON, and then tried using `jq` to find some specific data. Something like `jq '.*[0].tr[1].td[2].text' my_jimmy_*`. Didn’t work. The JSON structure was too complicated and unpredictable. Plus, relying on an external website to convert HTML to JSON was a no-go.

Scratch that. Back to the Python idea. I installed `beautifulsoup4` and `requests`. Wrote a quick script:

```python
import requests
from bs4 import BeautifulSoup

url = "the_jimmy_connors_tennis_website"  # I'm not putting the real URL here

response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")

# Then I started digging through the soup.
# print(soup.prettify())  # This is crucial for understanding the HTML structure.

# I needed to find the correct table that contained the data.
# This involved a lot of trial and error, inspecting the HTML, and figuring out the table structure.
# I won't bore you with all the details, but eventually, I found the right table.
# Let's say the table was the first table on the page (it probably wasn't).
table = soup.find_all("table")[0]

# Now I iterated through the rows and columns to get the data.
for row in table.find_all("tr"):
    cells = row.find_all("td")
    if cells:  # Make sure the row has cells (to avoid header rows)
        data = [cell.get_text() for cell in cells]
        print(data)
```

This got me SOMETHING. But it was still messy. Lots of empty strings and weird characters. I had to add some more code to clean up the data. Used `strip()` to remove whitespace, and filtered out empty strings.
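The cleanup went roughly like this. It's a minimal sketch, not my exact code: `clean_row` is a name I made up for illustration, the sample rows are invented, and I'm assuming the "weird characters" were mostly non-breaking spaces (`\xa0`), which old table-based pages tend to be full of.

```python
def clean_row(cells):
    """Strip whitespace from each cell and drop cells that end up empty."""
    # Assumption: the stray characters are non-breaking spaces; swap them for
    # plain spaces first so strip() and later comparisons behave predictably.
    stripped = (cell.replace("\xa0", " ").strip() for cell in cells)
    return [text for text in stripped if text]


# Invented sample input that mimics what get_text() tends to return.
raw_rows = [
    ["\n  Round 1 ", "", "Example\xa0Open", "  6-4, 6-3\r\n"],
    ["", "  ", "\n"],  # a spacer row with no real content
]

cleaned = [clean_row(row) for row in raw_rows]
cleaned = [row for row in cleaned if row]  # drop rows that were entirely empty
print(cleaned)
```

One catch: dropping empty cells shifts everything after them to the left, so if column positions matter, keep the empty strings and only strip.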

After a few hours of tweaking, I finally got a semi-decent output. It wasn’t perfect, but it was usable. I could take this data and load it into a spreadsheet or database.
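For the spreadsheet route, Python's built-in `csv` module is probably enough. Here's a small sketch, assuming the cleaned rows are plain lists of strings. `rows_to_csv`, the header names, and the sample rows are all hypothetical, just there to show the shape of it.

```python
import csv
import io


def rows_to_csv(rows, header=None):
    """Return the rows as CSV text, with an optional header line."""
    buffer = io.StringIO()
    # csv.writer quotes any cell that contains a comma, so scores like
    # "6-4, 6-3" stay in a single column.
    writer = csv.writer(buffer, lineterminator="\n")
    if header:
        writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


# Invented sample rows, standing in for the scraped data.
sample_rows = [
    ["Round 1", "Example Open", "6-4, 6-3"],
    ["Round 2", "Sample Cup", "7-5"],
]
print(rows_to_csv(sample_rows, header=["round", "event", "score"]))
```

To get an actual file, write the returned text to something like `open("connors.csv", "w", newline="")` (the filename is made up) and open that in your spreadsheet app.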

Lessons learned:

- Sometimes the simplest tools are the best (Beautiful Soup).

- Don’t be afraid to experiment with different approaches (jq, HTML to JSON converters).

- Data cleaning is ALWAYS necessary when scraping websites.

- Old websites are a pain in the butt.

So, yeah, that was my Jimmy Connors data scraping adventure. Not a huge win, but I learned a few things, and got to relive some childhood memories of watching a tennis legend. Maybe next time I’ll try a different site. Or maybe I’ll just watch some old matches on YouTube.