Alright, let’s dive into this Aleksandar Kovacevic prediction thing I messed around with last week. It was a bit of a rabbit hole, but hey, that’s how we learn, right?

So, it all started with seeing some buzz online about this Kovacevic guy and his, uh, “predictions.” Honestly, it sounded like a load of BS at first, but I was bored and had some free time, so I figured, why not see if there’s anything to it? My goal wasn’t to, like, make a fortune or anything, just to see if I could even replicate anything resembling his claims. Consider it a fun little coding project with a dash of skepticism.

First thing I did was try to find out what the heck he even does. Turns out, nailing down his exact “methodology” is like trying to grab smoke. Lots of vague stuff about “algorithms” and “deep learning” and all that jazz. Typical guru stuff, you know? But after digging through a bunch of forums and dodgy websites, I pieced together that he seems to focus on football (soccer) match outcomes, particularly goals scored. Apparently, he looks at historical data, team stats, and maybe some voodoo magic, who knows?

Next step: Data. You can’t predict anything without data, right? I spent a good chunk of a day scraping data from various sports statistics websites. I’m talking about past match results, team form, player stats, even some weird stuff like weather conditions on match day. Ended up with a massive CSV file that was a total mess. Cleaning that thing up was half the battle. Used Python with Pandas, of course. Who uses anything else for data cleaning?
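For anyone wondering what “cleaning that thing up” looks like in pandas, here’s a minimal sketch. The column names and the messy sample rows are made up to mimic typical scraping problems (stray whitespace, inconsistent headers, a duplicated row, an unparseable date, a missing score); a real scrape will have its own flavor of mess.

```python
import io

import pandas as pd

# Hypothetical messy scrape: inconsistent headers, stray whitespace,
# a duplicated row, an unparseable date and a missing score ("n/a").
RAW_CSV = """Date , Home Team,Away Team , Home Goals,Away Goals
2023-08-12,Arsenal ,Nottingham Forest,2,1
2023-08-12,Arsenal ,Nottingham Forest,2,1
2023-08-13, Chelsea,Liverpool,1,1
not a date,Everton,Fulham,0,1
2023-08-19,Fulham,Brentford,n/a,3
"""


def clean_matches(raw: str) -> pd.DataFrame:
    df = pd.read_csv(io.StringIO(raw))
    # Normalize headers: "Home Team " -> "home_team"
    df.columns = df.columns.str.strip().str.lower().str.replace(" ", "_")
    # Trim whitespace around team names so "Arsenal " and "Arsenal" match
    for col in ("home_team", "away_team"):
        df[col] = df[col].str.strip()
    # Bad dates and scores become NaT/NaN instead of raising an error
    df["date"] = pd.to_datetime(df["date"], errors="coerce")
    for col in ("home_goals", "away_goals"):
        df[col] = pd.to_numeric(df[col], errors="coerce")
    # Drop rows we can't use, then exact duplicates, then restore integer goals
    df = (
        df.dropna(subset=["date", "home_goals", "away_goals"])
        .drop_duplicates()
        .astype({"home_goals": int, "away_goals": int})
    )
    return df.sort_values("date").reset_index(drop=True)


matches = clean_matches(RAW_CSV)
print(matches)
```

Using `errors="coerce"` and then dropping the resulting NaNs means one bad row doesn’t crash the whole load; it just quietly disappears, so it’s worth checking how many rows you lose.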

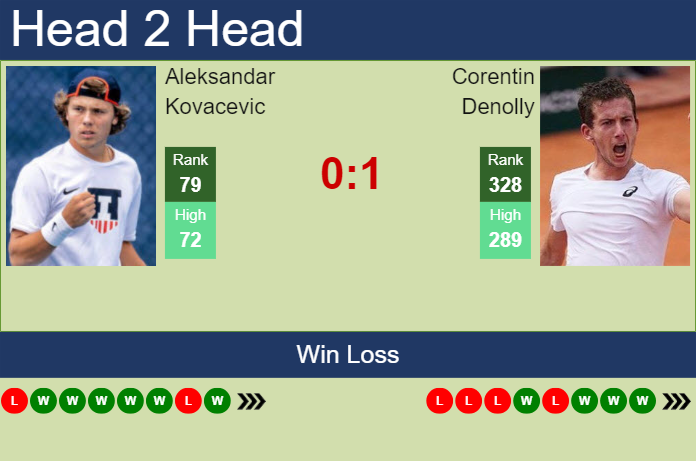

Okay, data acquired and semi-cleaned. Now for the “algorithm.” Since I couldn’t find any actual details about Kovacevic’s secret sauce, I decided to just wing it. I figured, let’s start simple. I built a basic model using scikit-learn. Just a logistic regression, nothing fancy. The features I used were things like: team’s average goals scored in the last 5 games, average goals conceded, win/loss ratio, and the head-to-head record between the two teams. Trained it on a couple of seasons’ worth of data.
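A minimal sketch of that kind of setup, under a few assumptions: results are labeled from the home side’s view as `H`, `D` or `A`; “win/loss ratio” is approximated by the share of recent games won; teams with no history get zero placeholders; the head-to-head feature is left out to keep it short; and a synthetic Poisson “season” with made-up team names stands in for the scraped CSV. The one detail worth copying is that each match’s features come only from games played *before* it, so the model can’t peek at the result it’s predicting.

```python
from collections import defaultdict, deque

import numpy as np
from sklearn.linear_model import LogisticRegression

WINDOW = 5  # "last 5 games", as in the post


def outcome(home_goals: int, away_goals: int) -> str:
    """Label a result from the home side's point of view: H, D or A."""
    if home_goals > away_goals:
        return "H"
    if home_goals < away_goals:
        return "A"
    return "D"


def build_features(matches, window: int = WINDOW):
    """matches: chronologically sorted (home, away, home_goals, away_goals) tuples.

    Each row uses only games played before that match, so a result never
    leaks into its own features.
    """
    # team -> its last `window` games as (scored, conceded, won)
    history = defaultdict(lambda: deque(maxlen=window))
    X, y = [], []
    for home, away, hg, ag in matches:
        row = []
        for team in (home, away):
            past = history[team]
            if past:
                scored, conceded, won = zip(*past)
                # avg scored, avg conceded, share of recent games won
                row += [np.mean(scored), np.mean(conceded), np.mean(won)]
            else:
                row += [0.0, 0.0, 0.0]  # no history yet: placeholder values
        X.append(row)
        y.append(outcome(hg, ag))
        # Update history only after the features for this match are built
        history[home].append((hg, ag, float(hg > ag)))
        history[away].append((ag, hg, float(ag > hg)))
    return np.array(X), np.array(y)


def synthetic_season(n_matches: int = 400, seed: int = 0):
    """Made-up fixtures with Poisson-distributed goals, standing in for real data."""
    rng = np.random.default_rng(seed)
    strength = {"Alpha": 1.8, "Bravo": 1.5, "Charlie": 1.3,
                "Delta": 1.1, "Echo": 0.9, "Foxtrot": 0.7}
    teams = list(strength)
    matches = []
    for _ in range(n_matches):
        home, away = rng.choice(teams, size=2, replace=False)
        home_goals = int(rng.poisson(strength[home]))
        away_goals = int(rng.poisson(strength[away]))
        matches.append((str(home), str(away), home_goals, away_goals))
    return matches


X, y = build_features(synthetic_season())
SPLIT = 300  # earlier matches for training, later ones for testing (no shuffling)
model = LogisticRegression(max_iter=1000)
model.fit(X[:SPLIT], y[:SPLIT])
probs = model.predict_proba(X[SPLIT:])  # one column per label in model.classes_
```

The chronological split means the held-out matches play the role of “a recent weekend.” Swapping `LogisticRegression` for `sklearn.neural_network.MLPClassifier` is one way to try a simple neural network on the same features, since both expose `fit` and `predict_proba`.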

Time to test it! I picked a recent weekend’s worth of football matches and fed the data into my model. It spat out some predictions, mostly probabilities of each team winning, drawing, or losing. Converted those probabilities into predicted scores (roughly). Then I compared my predictions to the actual results. Brace yourself…
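The post doesn’t spell out exactly how the probabilities were turned into picks. The simplest approach, sketched here, is to take the most likely outcome for each match and count how often it matches the real result. The `H`/`D`/`A` labels, the column order and the probabilities are all made up for illustration.

```python
import numpy as np

# Column order assumed to match scikit-learn's sorted classes_: away win, draw, home win.
CLASSES = np.array(["A", "D", "H"])


def picks_from_probs(probs: np.ndarray, classes: np.ndarray = CLASSES) -> np.ndarray:
    """Turn an (n_matches, 3) probability array into one predicted result per match."""
    return classes[np.argmax(probs, axis=1)]


def hit_rate(predicted, actual) -> float:
    """Fraction of matches where the predicted result equals the real one."""
    return float(np.mean(np.asarray(predicted) == np.asarray(actual)))


# Made-up probabilities for four matches, columns in CLASSES order.
probs = np.array([
    [0.20, 0.30, 0.50],
    [0.50, 0.30, 0.20],
    [0.30, 0.40, 0.30],
    [0.10, 0.20, 0.70],
])
actual = ["H", "H", "A", "D"]
picks = picks_from_probs(probs)
print(picks.tolist(), hit_rate(picks, actual))  # ['H', 'A', 'D', 'H'] 0.25
```

One quirk of the “pick the most likely result” rule: a draw is rarely the single most likely outcome, so models like this tend to almost never predict draws.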

The results were, uh, underwhelming. Like, really underwhelming. My model was right maybe 30% of the time? With three possible results (win, draw, or loss), that’s no better than guessing at random, which would be right about a third of the time. Most of the time it was completely off, and it was consistently wrong on games with upsets or unexpected results. So much for my “deep learning” football prediction empire.
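Whether a hit rate is “bad” depends on what you compare it against. A small sketch of a few naive baselines, assuming results are stored as `H`/`D`/`A` labels: the expected hit rate of guessing at random, always picking the home side, and always picking whatever result was most common in the training seasons. The labels below are invented.

```python
from collections import Counter


def baseline_hit_rates(train_results, test_results):
    """Hit rates of naive strategies on H/D/A labels (home win, draw, away win)."""
    n = len(test_results)
    most_common = Counter(train_results).most_common(1)[0][0]
    return {
        # Expected hit rate when guessing one of three results uniformly at random
        "uniform_random": 1 / 3,
        # Always predict a home win
        "always_home": sum(r == "H" for r in test_results) / n,
        # Always predict the result that was most common in the training data
        "always_most_common": sum(r == most_common for r in test_results) / n,
    }


# Invented labels, purely for illustration
train = ["H"] * 45 + ["D"] * 25 + ["A"] * 30
test = ["H", "A", "D", "H", "A", "H", "D", "H", "A", "D"]
print(baseline_hit_rates(train, test))
```

If a model can’t clearly beat `always_home` on held-out matches, its accuracy number isn’t telling you much.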

But, I wasn’t ready to give up completely. I messed around with the model parameters, added more features (corner kicks, shots on target, possession), and even tried a different model (a simple neural network). The results improved slightly, but still nowhere near anything I’d consider “reliable.” Maybe 40% accuracy at best. I tried betting on my “predictions” with virtual money, and let’s just say I’d be broke if it were real.
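The post doesn’t describe the virtual betting setup, so this sketch assumes the simplest one: a flat one-unit stake on each predicted result at decimal bookmaker odds, where a winning bet returns `stake * (odds - 1)` in profit and a losing one costs the stake. The optional `value_only` flag (a hypothetical addition) only bets when the model’s probability beats the bookmaker’s implied probability `1 / odds`. All odds and results here are made up.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    pick: str          # model's predicted result: "H", "D" or "A"
    model_prob: float  # model's probability for that pick
    odds: float        # decimal bookmaker odds for that pick (made up)
    actual: str        # the real result


def simulate_flat_stakes(bets, stake: float = 1.0, value_only: bool = False):
    """Return (profit, bets_placed) for flat-stake bets on each pick.

    With value_only=True, skip any bet where the model's probability does not
    exceed the bookmaker's implied probability 1 / odds.
    """
    profit, placed = 0.0, 0
    for bet in bets:
        if value_only and bet.model_prob <= 1 / bet.odds:
            continue
        placed += 1
        if bet.pick == bet.actual:
            profit += stake * (bet.odds - 1)
        else:
            profit -= stake
    return profit, placed


bets = [
    Bet("H", 0.55, 1.80, "H"),
    Bet("A", 0.40, 3.00, "D"),
    Bet("H", 0.60, 1.50, "A"),
    Bet("D", 0.35, 3.40, "D"),
]
print(simulate_flat_stakes(bets))
print(simulate_flat_stakes(bets, value_only=True))
```

Tracking how many bets were actually placed matters: a filter that skips most matches can look profitable over a handful of games purely by luck.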

So, what’s the takeaway? Well, first, predicting football is HARD. There’s so much randomness involved, it’s practically impossible to get it right consistently. Second, these “prediction gurus” like Kovacevic are probably full of it. They might have some clever algorithms, but I doubt they’re as accurate as they claim. And third, it was a fun little project and I learned a few things about data analysis and machine learning along the way. Plus, I got to waste a weekend doing something completely pointless, which is always a bonus.

Would I recommend trying to replicate Kovacevic’s predictions? Nah, probably not. Unless you have a lot of time and money to waste. But hey, if you do decide to give it a shot, let me know if you have any better luck. I’m genuinely curious.

That’s my story. Hope you found it mildly entertaining. Maybe next time I’ll try predicting something less random, like the weather. Or the lottery. Actually, scratch that.